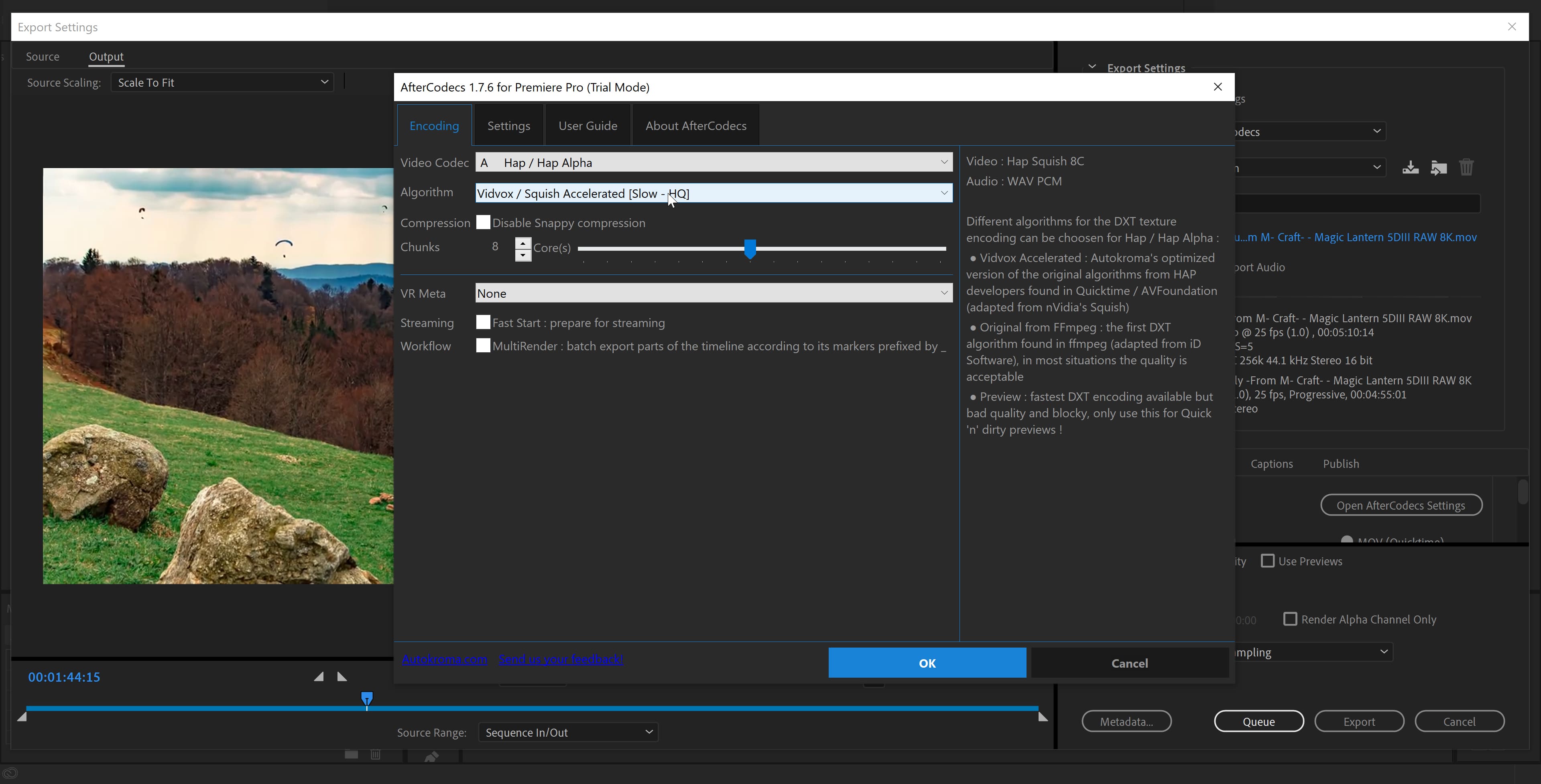

Please keep the discussion on the mailing list rather than commenting on the wiki (wiki discussions get unwieldy fast).

Motivation

As a follow-up to KIP-390: Support Compression Level, this proposal suggests adding support for per-codec configuration options to the Producer, Broker, and Topic configurations, which enables fine-tuning of compression behavior.

There are many kinds of compression codecs, each with its own intrinsic mechanism. In general, they provide various configuration options to cope with diverse kinds of input data by tuning the codec's compression/decompression behavior. However, Apache Kafka has so far only allowed data to be compressed with the default settings, and only the compression level was made configurable in KIP-390 (not shipped yet as of 3.0.0).

Fine-tuning the compression behavior has become important with recent trends. As larger volumes of data feed into event-driven architectures, multi-region Kafka architectures gain popularity, and Apache Kafka works as a single source of truth rather than a mere messaging system. The more the data is compressed, the more easily it is transferred over the network and the smaller its footprint on disk, which allows a longer retention period. For example, a user can take a speed-first approach when compressing messages sent to a regional Kafka cluster, and compress the data aggressively in the aggregation cluster to keep it over a long period.

This feature opens up all of the cases described above. It adds the following options to the Producer, Broker, and Topic configurations:

- …: the buffer size that feeds raw input into the Deflater or is fed by the uncompressed output from the Deflater.
- … (available: …, meaning 64kb, 256kb, 1mb, and 4mb respectively; default: 4.)
- …: enables long mode; the log of the window size that zstd uses to memorize the data being compressed. (available: 0 or …, default: 0, which disables long mode.)

All of the above are different, but they have something in common from the point of view of the compression process: each affects the amount of memory used during the process.

Since the default values are set to the currently used values, it will make no difference if the user doesn't make an explicit change.

The user can apply a detailed compression configuration to the producer. As at present, the broker will use the newly introduced compression settings to recompress the delivered record batch. One thing to note is that the records are recompressed only when the given record batch is compressed with a codec different from the one in the topic's compression settings; the detailed configurations are not compared.
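The recompression rule in this proposal can be sketched as follows. This is a minimal illustration in Python, not Kafka's broker code: `CompressionSettings`, `needs_recompression`, and the `window_log` option key are hypothetical names introduced only for this example.

```python
from dataclasses import dataclass, field


@dataclass
class CompressionSettings:
    """Hypothetical container: a codec name plus its per-codec options."""
    codec: str
    options: dict = field(default_factory=dict)


def needs_recompression(batch: CompressionSettings,
                        topic: CompressionSettings) -> bool:
    """Return True only when the batch codec differs from the topic codec.

    The per-codec options are deliberately ignored, mirroring the rule that
    the detailed configurations are not compared.
    """
    return batch.codec != topic.codec


# Different codecs: the broker would recompress with the topic's settings.
gzip_batch = CompressionSettings("gzip")
zstd_topic = CompressionSettings("zstd", {"window_log": 20})  # placeholder key
different_codec = needs_recompression(gzip_batch, zstd_topic)

# Same codec but different options: the batch is kept as delivered.
zstd_batch = CompressionSettings("zstd", {"window_log": 0})
same_codec = needs_recompression(zstd_batch, zstd_topic)

print(different_codec, same_codec)
```

One consequence of comparing only the codec is that a batch compressed by a producer with its own fine-tuned options is stored as delivered whenever the codec already matches the topic's.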
